Introduction: Recent events have cast a spotlight on the risks inherent in traditional multi-tenant database architectures. Modern SaaS platforms often house all customer data in a single, massive multi-tenant data store. This creates an “all or nothing” scenario in security: if that central store is breached, the damage is rarely confined to a single account – it’s usually a wholesale compromise of large segments of the user base (mimirsec.com). The recent “MongoBleed” vulnerability (CVE-2025-14847) underscored this risk: a single flaw in MongoDB’s centralized server allowed attackers to leak sensitive information from server memory across tenant boundaries (tenable.com).

The MongoBleed Wake-Up Call: Centralized Processing = Cross-Tenant Risk

In December 2025, MongoDB disclosed a critical memory leak vulnerability that exemplifies the dangers of centralized data processing. Dubbed MongoBleed, this flaw in MongoDB’s server (in how it handled zlib-compressed network messages) could allow a remote, unauthenticated attacker to read chunks of uninitialized memory from the database process (tenable.com). In effect, an attacker could send a specially crafted request and have the MongoDB server spill sensitive data from its heap, including credentials, session tokens, keys, and other secrets, without ever logging in (tenable.com; varonis.com). The root cause was akin to the infamous Heartbleed bug: a length-handling error in the server’s handling of compressed messages led to a memory over-read and data leakage (varonis.com).
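To make the bug class concrete, here is a deliberately simplified model in TypeScript using Node's built-in `node:zlib`. It is not MongoDB's code: the function names, buffer sizes, and the planted "stale" secret are hypothetical. It only shows how returning a declared length, rather than the number of bytes decompression actually wrote, hands leftover memory back to the caller.

```typescript
import { deflateSync, inflateSync } from "node:zlib";

// Hypothetical reused scratch buffer that still holds bytes from an earlier
// request (here, another tenant's secret written at offset 16).
function makeStaleBuffer(size: number, leftover: string): Buffer {
  const buf = Buffer.alloc(size);
  buf.write(leftover, 16);
  return buf;
}

// Vulnerable pattern: trust the size declared in the message header and
// return that many bytes, even if decompression produced fewer.
function handleVulnerable(compressed: Buffer, declaredSize: number, scratch: Buffer): Buffer {
  const actual = inflateSync(compressed);
  actual.copy(scratch, 0); // overwrites only the first actual.length bytes
  return scratch.subarray(0, declaredSize); // BUG: stale bytes past actual.length leak
}

// Fixed pattern: reject a mismatched length and return only bytes actually written.
function handleFixed(compressed: Buffer, declaredSize: number, scratch: Buffer): Buffer {
  const actual = inflateSync(compressed);
  if (actual.length !== declaredSize || actual.length > scratch.length) {
    throw new Error("declared size does not match decompressed size");
  }
  actual.copy(scratch, 0);
  return scratch.subarray(0, actual.length);
}

// Usage: the attacker sends 2 real bytes but declares 64.
const request = deflateSync(Buffer.from("hi"));
const leaked = handleVulnerable(request, 64, makeStaleBuffer(64, "tenantB:api_key=sk_live_123"));
console.log(leaked.includes("sk_live_123")); // true
```

In this model the leftover bytes belong to a different tenant, which is why an over-read inside a shared process becomes a cross-tenant problem. The fix itself is unglamorous: validate every declared length against what was actually produced.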

Because MongoDB’s architecture processes all tenants’ data in one server memory space, MongoBleed turned into a cross-tenant nightmare. Any SaaS platform running a vulnerable MongoDB instance faced the prospect that an attacker (or even a malicious or compromised tenant) could extract fragments of other tenants’ data that happened to be in the server’s memory. Multi-tenant systems are supposed to segregate customer data logically, but a low-level bug like this ignores those logical boundaries – the database server doesn’t distinguish whose data is in RAM when it leaks it. As a result, a single exploit could jump from one tenant’s context into another’s data. In the words of one security analysis, when multi-tenant stores are breached, it’s often a “wholesale” exposure rather than an isolated incident (mimirsec.com). MongoBleed starkly demonstrated that centralized processing = centralized risk: one vulnerability in a shared engine can cascade into a breach of many customers’ information at once.

MongoDB’s recommended mitigations (patching or disabling the vulnerable compression, restricting network access, etc.) are reactive measures. The deeper lesson for CTOs, CEOs, and CISOs is that architecture matters. To truly contain breach impact in a SaaS environment, we need to rethink the data platform design itself. This is where BrunnrDB comes in – turning the MongoBleed lesson into an opportunity to eliminate entire classes of cross-tenant attacks through an innovative architecture.

BrunnrDB’s Architectural Deep Dive: Shard‑on‑User‑Access + Browser-Side Enforcement

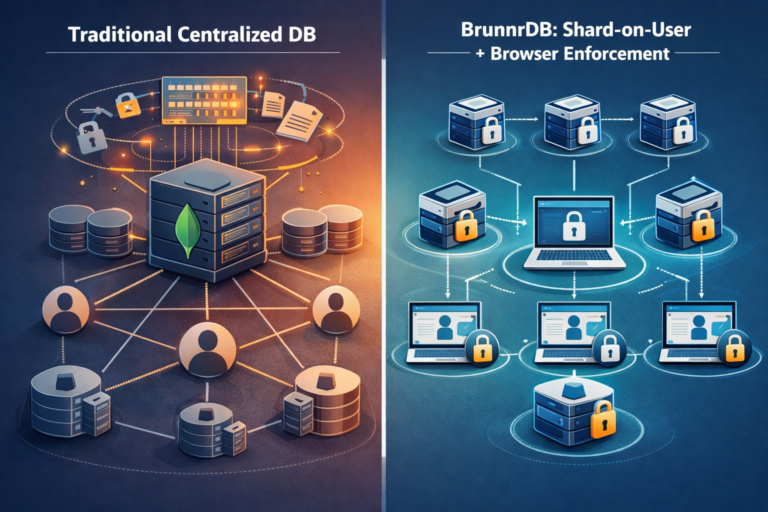

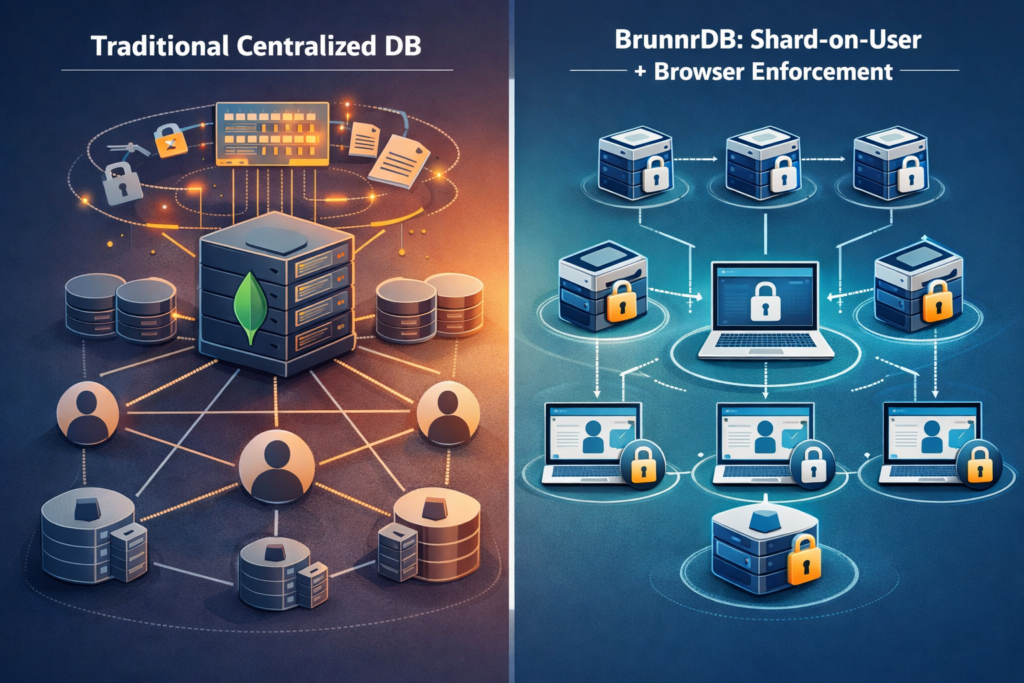

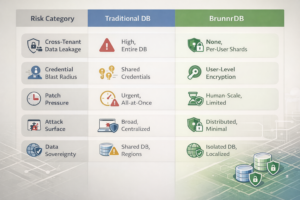

BrunnrDB is built on a fundamentally different paradigm than traditional databases like MongoDB. It implements what Mímir Security (BrunnrDB’s creators) calls a “Shard on User Access” model (mimirsec.com): a data architecture where both the data partitioning and the enforcement logic are aligned with individual user or tenant boundaries. In simple terms, BrunnrDB shards data by user/tenant and pushes critical computation and security enforcement out to the end-user’s browser, rather than centralizing all processing on the server. The result is a system where the database server never has enough context, either cryptographically or structurally, to jump from the data one user is allowed to see into another user’s data (mimirsec.com). Let’s break down the key elements of this architecture:

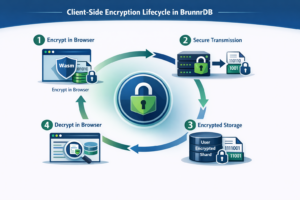

Client-Side Encryption and Computation: In BrunnrDB, sensitive data is encrypted and processed at the edge – in the end-user’s browser or device – before it ever touches the server. For example, when a user submits or queries data, that data is packaged and encrypted in a secure WebAssembly runtime in the browser, under keys derived from the user’s credentials or access rights (mimirsec.com). The server receives only opaque, authenticated ciphertext along with minimal metadata (such as which shard it belongs to or indexing tags). The heavy lifting of parsing, compression, and filtering of sensitive fields can all be done client-side, so by the time data reaches the central store, it is unintelligible to the server. Minimizing data in use this way means the server never sees raw plaintext to manipulate, dramatically shrinking the attack surface for memory leaks and injection attacks. Even a seemingly trivial operation like compression can be handled more safely: the client compresses data before encrypting it (ciphertext is effectively incompressible, so compressing after encryption gains nothing), and the server never decompresses untrusted input. In short, BrunnrDB’s server is blind to the sensitive content by design (mimirsec.com).
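A minimal sketch of that client-side step, assuming AES-256-GCM for encryption and zlib deflate for compression. Node's `node:crypto` and `node:zlib` keep it self-contained; a browser build would use the Web Crypto API instead. The `Envelope` shape and the `sealRecord`/`openRecord` names are hypothetical, not BrunnrDB's API. Compression happens before encryption, and the shard ID is bound to the ciphertext as additional authenticated data (AAD).

```typescript
import { createCipheriv, createDecipheriv, randomBytes } from "node:crypto";
import { deflateSync, inflateSync } from "node:zlib";

// Hypothetical wire format: the only plaintext the server sees is the shard ID.
interface Envelope {
  shardId: string;
  iv: Buffer;         // 12-byte nonce, unique per record
  ciphertext: Buffer; // AES-256-GCM output over the compressed record
  tag: Buffer;        // 16-byte GCM authentication tag
}

// Runs on the client: compress first, then encrypt under the shard key.
function sealRecord(shardId: string, shardKey: Buffer, record: object): Envelope {
  const compressed = deflateSync(Buffer.from(JSON.stringify(record), "utf8"));
  const iv = randomBytes(12);
  const cipher = createCipheriv("aes-256-gcm", shardKey, iv);
  cipher.setAAD(Buffer.from(shardId, "utf8")); // bind ciphertext to its shard
  const ciphertext = Buffer.concat([cipher.update(compressed), cipher.final()]);
  return { shardId, iv, ciphertext, tag: cipher.getAuthTag() };
}

// Also runs on the client: verify and decrypt, then decompress.
function openRecord(env: Envelope, shardKey: Buffer): unknown {
  const decipher = createDecipheriv("aes-256-gcm", shardKey, env.iv);
  decipher.setAAD(Buffer.from(env.shardId, "utf8"));
  decipher.setAuthTag(env.tag);
  const compressed = Buffer.concat([decipher.update(env.ciphertext), decipher.final()]);
  return JSON.parse(inflateSync(compressed).toString("utf8"));
}

// Usage: the key stays on the client; only `env` is sent to the server.
const shardKey = randomBytes(32);
const env = sealRecord("user-42", shardKey, { note: "quarterly forecast" });
console.log(openRecord(env, shardKey)); // { note: 'quarterly forecast' }
```

Binding the shard ID as AAD means a record cannot be silently relabeled into another shard: decryption with a changed shard ID fails authentication, as does decryption with any other key.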

Per-User (and Per-Group) Shards: Instead of one giant monolithic database, BrunnrDB splits the dataset into thousands of micro-shards, each defined by fine-grained access boundaries (mimirsec.com). A “shard” in BrunnrDB corresponds to a security domain: it could be as narrow as a single user’s private data, a small, defined group’s shared records, or a specific tenant organization’s slice. The granularity can be tailored, but the guiding principle is that each shard contains only the data that a given user or cohort is permitted to access (mimirsec.com). Crucially, each shard is encrypted with its own key, unique to that user or group, and the database server never possesses those keys (mimirsec.com). This means even though many shards might reside in the same physical database cluster for operational convenience, they remain cryptographically isolated from each other – the server cannot decrypt or merge them at will. The “shard key” lives on the client side (or in a secure key management service that only yields keys to authenticated clients under strict policy). From a security perspective, this is akin to giving every tenant or user their own vault within a bank – even if it’s the same vault room, each vault has a separate key that the bank itself doesn’t hold.
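One way to give every shard its own key without storing thousands of unrelated secrets is to stretch the user's credential into a root secret with scrypt and then derive each shard key with HKDF (RFC 5869), using the shard ID as the context string. This is an assumption for illustration: the source does not say how BrunnrDB derives keys, and `rootSecretFromPassword`, `deriveShardKey`, and the `shard-key:` label are hypothetical. A shared group shard would need its key distributed to members rather than derived from one person's password.

```typescript
import { hkdfSync, scryptSync } from "node:crypto";

// Step 1 (hypothetical): stretch the user's credential into a 256-bit root secret.
// scrypt is deliberately slow to resist offline guessing; the salt is per user.
function rootSecretFromPassword(password: string, userSalt: Buffer): Buffer {
  return scryptSync(password, userSalt, 32);
}

// Step 2: derive an independent 256-bit key per shard. HKDF's `info` input
// separates contexts, so each shard ID yields an unrelated key.
function deriveShardKey(rootSecret: Buffer, shardId: string): Buffer {
  const info = Buffer.from(`shard-key:${shardId}`, "utf8");
  return Buffer.from(hkdfSync("sha256", rootSecret, Buffer.alloc(0), info, 32));
}

// Usage: all derivation happens on the client; the server never sees `root`.
const root = rootSecretFromPassword("correct horse battery staple", Buffer.from("user-42-salt"));
const kA = deriveShardKey(root, "user-42/private");
const kB = deriveShardKey(root, "user-42/drafts");
console.log(kA.equals(kB)); // false
```

Deriving rather than storing keys means the client can recreate any of its shard keys on demand, while learning one shard key reveals nothing about its siblings.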

No Shared Query Context (Lateral Movement Eliminated): In BrunnrDB’s design, there is no single query that traverses data across different user shards without going through a user-specific context. Traditional databases execute queries in a global context – a poorly coded query or injection can accidentally pull in records from other tenants if checks fail. BrunnrDB flips this model. The server’s query layer is only capable of operating within the boundaries of a single shard at a time, because it literally doesn’t have references or pointers that join across shards (mimirsec.com). There is no structural path for an attacker to pivot from one user’s data to another’s through the server (mimirsec.com). Even if an attacker compromises the query processing layer, they can only assemble results from the shards for which they have a valid decryption token or key – everything else remains ciphertext that cannot be interpreted (mimirsec.com). For example, an attacker who somehow gains access to a BrunnrDB server cannot perform a “table scan” to dump all data, because the data is partitioned and linked only by encrypted identifiers. They would see a collection of encrypted chunks tied to user IDs, with no way to aggregate them into meaningful information. In essence, lateral movement at the data layer is off the table – the database cannot be tricked into revealing what it never structurally links together.
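The structural point, that the server exposes no operation spanning shards, can be sketched as a storage interface whose only read path takes a single shard ID. The `ShardStore` class, `seal`/`unseal`, and `queryShard` are hypothetical; records are encrypted with AES-256-GCM via `node:crypto` to keep the block self-contained, and the "query" (a filter) runs on the client after decryption.

```typescript
import { createCipheriv, createDecipheriv, randomBytes } from "node:crypto";

type Sealed = { iv: Buffer; ct: Buffer; tag: Buffer };

// Server side (hypothetical): a map from shard ID to opaque ciphertexts.
// Note what is missing: no scanAll(), no join, no cross-shard index, no keys.
class ShardStore {
  private shards = new Map<string, Sealed[]>();
  append(shardId: string, item: Sealed): void {
    const list = this.shards.get(shardId) ?? [];
    list.push(item);
    this.shards.set(shardId, list);
  }
  fetch(shardId: string): readonly Sealed[] {
    return this.shards.get(shardId) ?? [];
  }
}

// Client side: encrypt and decrypt with the shard's key.
function seal(key: Buffer, text: string): Sealed {
  const iv = randomBytes(12);
  const c = createCipheriv("aes-256-gcm", key, iv);
  const ct = Buffer.concat([c.update(text, "utf8"), c.final()]);
  return { iv, ct, tag: c.getAuthTag() };
}

function unseal(key: Buffer, s: Sealed): string {
  const d = createDecipheriv("aes-256-gcm", key, s.iv);
  d.setAuthTag(s.tag);
  return Buffer.concat([d.update(s.ct), d.final()]).toString("utf8");
}

// Client side: a "query" is fetch one shard, decrypt locally, then filter.
function queryShard(store: ShardStore, shardId: string, key: Buffer, pred: (row: string) => boolean): string[] {
  return store.fetch(shardId).map((s) => unseal(key, s)).filter(pred);
}

// Usage
const store = new ShardStore();
const aliceKey = randomBytes(32);
const bobKey = randomBytes(32);
store.append("alice", seal(aliceKey, "invoice 1001"));
store.append("alice", seal(aliceKey, "memo: renew"));
store.append("bob", seal(bobKey, "invoice 2002"));
console.log(queryShard(store, "alice", aliceKey, (r) => r.startsWith("invoice"))); // [ 'invoice 1001' ]
```

Because filtering happens after decryption on the client, the server never evaluates a predicate over plaintext, and pointing a query at Bob's shard with Alice's key fails at authentication rather than returning data.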

Keys Scoped Per User, Not Per Database: Traditional databases and even many sharded systems often rely on a handful of powerful credentials (like a root DB password or a cluster key) that, if compromised, open up broad swaths of data. BrunnrDB rejects that model. There is no single “god” key that unlocks everything – not even a DBA or cloud admin has one – because keys are scoped to logical access domains (individual shards) rather than to the whole service (mimirsec.com). If an attacker somehow steals a decryption key, they gain access to at most one shard’s worth of data (e.g., one user’s records) and nothing else (mimirsec.com). Stealing another user’s data would require compromising a completely different key, which is stored and managed separately. This fine-grained key management means compromising one credential no longer equates to owning the entire database. It’s the principle of least privilege applied at the cryptographic level. Additionally, key management in BrunnrDB follows the user lifecycle: keys can be rotated or revoked per user or group without affecting others, and access audits can be conducted in terms of “who can see what” rather than which database or table is affected (mimirsec.com). The net effect is that even insiders or cloud operators cannot bypass the system’s tenant isolation: they would need to obtain each user’s keys or break strong encryption shard by shard.
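Per-shard rotation and revocation can be sketched with a small key registry in which every shard carries its own key version. `ShardKeyRegistry` is hypothetical and stands in for whatever key-management service holds the keys; the source does not describe BrunnrDB's KMS interface. The sketch illustrates one narrow property: rotating or revoking one shard's key leaves every other shard's key untouched.

```typescript
import { randomBytes } from "node:crypto";

interface KeyVersion { version: number; key: Buffer }

class ShardKeyRegistry {
  private keys = new Map<string, KeyVersion>();
  private revoked = new Set<string>();

  // Return the current key for a shard, creating version 1 on first use.
  current(shardId: string): KeyVersion {
    if (this.revoked.has(shardId)) throw new Error(`shard ${shardId} is revoked`);
    let kv = this.keys.get(shardId);
    if (!kv) {
      kv = { version: 1, key: randomBytes(32) };
      this.keys.set(shardId, kv);
    }
    return kv;
  }

  // Replace one shard's key; the caller must then re-encrypt that shard's records.
  rotate(shardId: string): KeyVersion {
    const prev = this.current(shardId);
    const next = { version: prev.version + 1, key: randomBytes(32) };
    this.keys.set(shardId, next);
    return next;
  }

  // Revoke one shard by destroying its key (crypto-shredding): its ciphertext
  // becomes unreadable, and no other shard is affected.
  revoke(shardId: string): void {
    this.keys.delete(shardId);
    this.revoked.add(shardId);
  }
}

// Usage: rotating team-a's key does not touch team-b's key.
const registry = new ShardKeyRegistry();
const teamBKey = registry.current("team-b").key;
registry.rotate("team-a");
console.log(registry.current("team-b").key.equals(teamBKey)); // true
```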

By combining these elements – user-scoped shards, client-side encryption, and distributed query enforcement – BrunnrDB ensures that the database server itself is mostly a dumb storage node from a security standpoint. It never sees sensitive plaintext and never handles more than one tenant’s (or user’s) data in any given operation. This architecture aligns perfectly with Zero Trust principles: assume the server (or the network) could be compromised at any time, and design it such that even a full server breach yields minimal data. In practice, BrunnrDB’s “Shard on User Access” model means the maximum blast radius of a breach is dramatically reduced: instead of losing an entire database or many tenants’ data, you’d at worst lose one shard’s worth – which might be just one user’s information.

MongoBleed, Averted: Why This Attack Fails on BrunnrDB

Had MongoDB been architected like BrunnrDB, the MongoBleed incident would simply not have been the crisis it was. Here’s why BrunnrDB’s design neutralizes that class of vulnerability:

Isolated Memory Per Tenant: MongoBleed exploited the fact that MongoDB’s shared server process was decompressing data for all tenants in one space. In BrunnrDB, there is no equivalent shared memory pool of sensitive data from multiple tenants. Each shard’s data is segregated, and any server-side memory operates on encrypted objects specific to one shard at a time. An attack that leaks “uninitialized memory” from a BrunnrDB server might retrieve a few bytes of ciphertext from the current user’s shard – effectively gibberish without that user’s key – and nothing else. There’s no scenario where an attacker could trick the server into dumping another tenant’s plaintext secrets, because that plaintext isn’t even present on the server. As BrunnrDB’s philosophy states, the server cannot assemble more data in plaintext than what the active user is authorized to see (mimirsec.com). In the MongoBleed case, this means the bug would have found no juicy data to leak: the memory might contain only encrypted data (indecipherable) or at worst some fragment of one user’s recent activity. The huge cross-tenant credential dump that was possible with MongoDB’s design is off the table.
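To make the contrast concrete, here is a simplified model in which an over-read server buffer holds only what the server ever received: an envelope sealed on the client with AES-256-GCM. Everything here (`clientSeal`, the 256-byte scratch buffer, the sample secret) is hypothetical and only shows what an attacker would get back; it does not model BrunnrDB's actual memory management.

```typescript
import { createCipheriv, randomBytes } from "node:crypto";

// Client side: seal a secret under a key the server never sees.
// Envelope layout (assumed): 12-byte IV | ciphertext | 16-byte tag.
function clientSeal(key: Buffer, plaintext: string): Buffer {
  const iv = randomBytes(12);
  const c = createCipheriv("aes-256-gcm", key, iv);
  const ct = Buffer.concat([c.update(plaintext, "utf8"), c.final()]);
  return Buffer.concat([iv, ct, c.getAuthTag()]);
}

// Server side: the scratch buffer only ever receives opaque envelopes.
const scratch = Buffer.alloc(256);
const tenantBKey = randomBytes(32); // held by tenant B's client only
clientSeal(tenantBKey, "db_password=hunter2").copy(scratch, 0);

// A MongoBleed-style over-read hands the whole buffer to the attacker.
const leaked = scratch.subarray(0, 256);
console.log(leaked.includes("hunter2")); // false: only ciphertext and zeros
```

The leak still happens in this model; what changes is its value. Without tenant B's key, the attacker holds 47 bytes of ciphertext and a run of zeros.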

No Central Parser to Exploit: The MongoBleed flaw was in the MongoDB server’s network message parser (specifically in zlib compression handling). BrunnrDB’s architecture drastically reduces such parsing on the server. Compression, serialization, and other complex transformations are either done on the client or applied only within the context of an already-encrypted payload. In effect, BrunnrDB’s server is not eagerly unpacking and inspecting the internals of messages for all tenants – it doesn’t need to, since it mostly sees ciphertext and simple routing metadata. This reduction in server logic means fewer opportunities for bugs like MongoBleed to even arise. And if a similar vulnerability did exist in the server’s handling of a message, the damage is contained to that message’s scope (one shard) rather than being able to bleed across different users’ data. BrunnrDB turns multi-tenant exploitation from a buffet into a dead end.
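If the server's only parsing job is reading routing metadata, that parser can stay small and strict. The layout below (1-byte version, 1-byte shard-ID length, shard ID, 4-byte big-endian payload length, payload) is an assumed format for illustration, not BrunnrDB's wire protocol, and `parseEnvelope`/`buildEnvelope` are hypothetical. The habit it demonstrates is checking every declared length against the bytes actually received before using it; the payload is never decompressed or inspected.

```typescript
interface Routed { shardId: string; payload: Buffer }

const VERSION = 1;

// Server side: parse routing metadata only; the payload stays an opaque slice.
function parseEnvelope(msg: Buffer): Routed {
  if (msg.length < 2) throw new Error("truncated header");
  if (msg.readUInt8(0) !== VERSION) throw new Error("unsupported version");
  const idLen = msg.readUInt8(1);
  const idEnd = 2 + idLen;
  if (idLen === 0 || msg.length < idEnd + 4) throw new Error("truncated shard id");
  const shardId = msg.toString("utf8", 2, idEnd);
  const payloadLen = msg.readUInt32BE(idEnd);
  const payloadStart = idEnd + 4;
  // The declared length must match exactly what arrived: no over-read, no trailing bytes.
  if (msg.length - payloadStart !== payloadLen) throw new Error("length mismatch");
  return { shardId, payload: msg.subarray(payloadStart) };
}

// Client side: build a message in the same assumed layout.
function buildEnvelope(shardId: string, payload: Buffer): Buffer {
  const id = Buffer.from(shardId, "utf8");
  if (id.length === 0 || id.length > 255) throw new Error("bad shard id length");
  const header = Buffer.alloc(2 + id.length + 4);
  header.writeUInt8(VERSION, 0);
  header.writeUInt8(id.length, 1);
  id.copy(header, 2);
  header.writeUInt32BE(payload.length, 2 + id.length);
  return Buffer.concat([header, payload]);
}

// Usage
const wire = buildEnvelope("user-42", Buffer.from([0xde, 0xad, 0xbe, 0xef]));
console.log(parseEnvelope(wire).shardId); // user-42
```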

Cryptographic Boundaries Stop Lateral Movement: Even if an attacker somehow compromised BrunnrDB’s server software (akin to gaining arbitrary read access), they would face cryptographic roadblocks at every turn. They could not use one tenant’s access to query another tenant’s data, because there’s no shared context to pivot into and everything outside the current shard remains encrypted with unknown keys (mimirsec.com). Think of it as each tenant’s data being in a locked safe deposit box inside the database – MongoBleed was like finding a master key that opened all the boxes at once due to a flaw in the vault door, whereas BrunnrDB ensures no such master key exists in the vault. There’s simply no mechanism by which a flaw can automatically escalate to a cross-tenant data leak; the architecture inherently limits the blast radius of any attack to the smallest unit of data (the shard).
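The cryptographic roadblock can be demonstrated directly with authenticated encryption. This sketch assumes AES-256-GCM via `node:crypto` with the shard ID bound as additional authenticated data; both are illustrative choices rather than documented BrunnrDB details, and `sealFor`/`tryOpen` are hypothetical. A key for one shard cannot open another shard's record, and a record relabeled as belonging to a different shard fails authentication even under its original key.

```typescript
import { createCipheriv, createDecipheriv, randomBytes } from "node:crypto";

interface SealedRecord { shardId: string; iv: Buffer; ct: Buffer; tag: Buffer }

function sealFor(shardId: string, key: Buffer, text: string): SealedRecord {
  const iv = randomBytes(12);
  const c = createCipheriv("aes-256-gcm", key, iv);
  c.setAAD(Buffer.from(shardId, "utf8")); // the shard ID is authenticated, not encrypted
  const ct = Buffer.concat([c.update(text, "utf8"), c.final()]);
  return { shardId, iv, ct, tag: c.getAuthTag() };
}

// Returns the plaintext, or null if authentication fails.
function tryOpen(rec: SealedRecord, key: Buffer): string | null {
  try {
    const d = createDecipheriv("aes-256-gcm", key, rec.iv);
    d.setAAD(Buffer.from(rec.shardId, "utf8"));
    d.setAuthTag(rec.tag);
    return Buffer.concat([d.update(rec.ct), d.final()]).toString("utf8");
  } catch {
    return null;
  }
}

const keyA = randomBytes(32);
const keyB = randomBytes(32);
const recA = sealFor("tenant-a", keyA, "payroll.csv");

console.log(tryOpen(recA, keyA));                             // payroll.csv
console.log(tryOpen(recA, keyB));                             // null (wrong shard key)
console.log(tryOpen({ ...recA, shardId: "tenant-b" }, keyA)); // null (AAD mismatch)
```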

To put it succinctly, BrunnrDB’s design ensures that neither the server nor an attacker who compromises it can ever assemble more plaintext than the current user is legitimately allowed to see (mimirsec.com). MongoBleed showed that a traditional database server could inadvertently assemble and expose far more than any one user should see. BrunnrDB’s mix of client-side enforcement and per-user sharding means even a zero-day memory leak or an insider attack cannot break out of its sandbox – one user’s sandbox, one user’s data. This is a categorical prevention of the entire class of cross-tenant leakage attacks exemplified by MongoBleed.

Benefits for SaaS Platforms: Breach Containment, Reduced Attack Surface, and Data Sovereignty

For executives evaluating data platforms, BrunnrDB’s approach is not just an academic exercise in security architecture – it translates into very tangible business benefits for SaaS providers and their customers:

Breach Containment by Design: BrunnrDB drastically limits the “blast radius” of any breach. Instead of a catastrophic leak of your entire customer database, an incident would be confined to a single shard – often just one user or one team’s data. This containment is baked into how data is stored and encrypted, not just an after-the-fact access policy. In practice, this could be the difference between a minor security incident and a headline-grabbing disaster. As one expert put it, sharding with user-level granularity can transform a breach scenario from “catastrophic all-at-once exposure” into “bounded, auditable, and recoverable incidents” (mimirsec.com). You can confidently tell your customers (and regulators) that even if the worst happens, only a sliver of data could ever be exposed, never the whole treasure trove.

Reduced Attack Surface: By pushing computation and enforcement to the client side, BrunnrDB minimizes the amount of complex code running on the server – especially the kind of code (parsers, query engines, etc.) that tends to harbor critical vulnerabilities. The BrunnrDB server isn’t busy juggling multiple tenants’ plaintext data or interpreting wide-ranging queries; it’s mainly storing encrypted blobs and serving them to authorized clients. Fewer responsibilities mean fewer avenues for exploitation. The MongoBleed bug arose from a feature (compression) in the server’s networking logic. In BrunnrDB, such features are either unnecessary or safely handled at the edges, meaning the core data store is simpler and more robust. For a CISO, this reduction in attack surface – essentially having a “dumb” data layer that an attacker can’t do much with – is a huge win. It also alleviates the patching scramble: there are just fewer critical patches to worry about when your server is not a kitchen-sink of features. In short, BrunnrDB’s distributed approach shrinks the targetable code and capabilities of the cloud side, making it far harder for an attacker to find a foothold or escalate an attack.

Tenant Data Sovereignty and Compliance: BrunnrDB’s per-tenant (or per-user) sharding also offers strong alignment with data sovereignty and regulatory compliance needs. Because each shard is an independent unit, you can choose to store shards in specific geographic regions or isolated environments to meet local laws (e.g. keep EU customer data on EU soil, US data in the US, etc.). You can even give tenants control over their own shard keys if desired, ensuring that you as the provider cannot access their plaintext without consent – a powerful feature for customers in regulated industries. BrunnrDB’s architecture makes it easier to prove cryptographic isolation between tenants. For example, you can demonstrate that a breach in one region’s shard cannot possibly expose data from another region (mimirsec.com). Auditors and savvy customers love such clear-cut separation. It moves the conversation from “trust us, we logically segregate your data” to “here’s the structural and cryptographic proof that your data stands alone.” In an era of strict data protection regulations, this level of tenant data sovereignty – where each customer’s data is essentially in its own vault – is not just a nice-to-have, it’s becoming a must-have. You’re giving each tenant ownership over their data’s fate, rather than intermingling it with others’.
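Because each shard is an independent unit, placement can be decided shard by shard. The sketch below assumes a per-tenant policy record; the region names, `TenantPolicy`, `ShardPlacement`, and `placeShard` are hypothetical, and real residency enforcement would also involve the storage and key-management layers. It additionally records whether the provider may ever request a shard's key, reflecting the option of tenant-held keys.

```typescript
type Region = "eu-central" | "us-east";

interface TenantPolicy {
  residency: Region;         // where this tenant's shards must live
  customerHeldKeys: boolean; // true if the tenant, not the provider, holds shard keys
}

interface ShardPlacement { shardId: string; region: Region; providerCanRequestKey: boolean }

// Decide placement per shard from the owning tenant's policy; fail closed if unknown.
function placeShard(shardId: string, tenantId: string, policies: Map<string, TenantPolicy>): ShardPlacement {
  const policy = policies.get(tenantId);
  if (!policy) throw new Error(`no residency policy for tenant ${tenantId}`);
  return {
    shardId,
    region: policy.residency,
    providerCanRequestKey: !policy.customerHeldKeys,
  };
}

// Usage
const policies = new Map<string, TenantPolicy>([
  ["acme-gmbh", { residency: "eu-central", customerHeldKeys: true }],
  ["acme-inc", { residency: "us-east", customerHeldKeys: false }],
]);
console.log(placeShard("acme-gmbh/user-7", "acme-gmbh", policies).region); // eu-central
```

Failing closed on a missing policy keeps a shard from silently landing in a default region.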

Beyond these headline benefits, BrunnrDB’s model also aligns with modern Zero Trust security frameworks and the principle of least privilege. It forces you to answer tough questions (in a good way) about your architecture: “What is the maximum unit of compromise in our system? A table? A whole database? Or just one user’s chunk of data?” BrunnrDB lets you confidently answer that in the smallest terms (mimirsec.com). It also integrates with existing cloud security practices – you can still use AWS’s tenant-isolation or VPC segregation and layer BrunnrDB on top for cryptographic separation (mimirsec.com). Think of it as defense-in-depth: even if your application layer or network layer fails, the data layer remains segmented and secure.

Conclusion: A New Paradigm for Executive Consideration

The MongoBleed incident was a sobering reminder that even trusted, widely-used data platforms can harbor vulnerabilities that cut across tenants and customers. For CEOs, CTOs, and CISOs steering SaaS companies, the takeaway is clear: to protect highly aggregated cloud data, we must shift from just protecting the perimeter to fundamentally restructuring and fortifying the data layer itself. BrunnrDB exemplifies this shift. Its shard-on-user-access architecture, combining end-user-side enforcement with per-tenant cryptographic isolation, ensures that a breach in one part of the system cannot metastasize into a platform-wide failure. It delivers breach containment, reduces the attack surface, and guarantees each tenant’s data sovereignty in a way that legacy architectures simply cannot match.

Adopting a platform like BrunnrDB is both a technical and a strategic decision. Technically, it means embracing modern techniques like client-side processing and zero-trust data storage. Strategically, it sends a message to customers and partners that your SaaS prioritizes the security of their data by design. In an environment where trust is a competitive differentiator, using an architecture that prevents cross-tenant data leaks outright can be a game-changer. No CTO or CISO wants to explain to their board how a single bug led to leaking many customers’ data. By leveraging BrunnrDB’s approach, such explanations become a thing of the past – because that kind of breach simply isn’t possible.

In summary, MongoBleed taught the industry a painful lesson, and BrunnrDB is one answer to it. For executives evaluating data platforms, the question to ask is: do we want to keep relying on a centralized model that can fail big, or do we invest in a modern architecture that contains failures to the very small? BrunnrDB makes a compelling case that the future of SaaS data security lies in the latter – where even if an attacker gets in, the blast radius is minimal, controlled, and confined to one tenant at a time (mimirsec.com). That is the kind of resilience that turns security from a constant worry into a competitive advantage.

References:

Varonis Threat Labs – “MongoBleed (CVE-2025-14847): Risk, Detection & How Varonis Protects You.” (Dec 29, 2025). varonis.com

Tenable Blog – “CVE-2025-14847 (MongoBleed): MongoDB Memory Leak Vulnerability Exploited in the Wild.” (Dec 29, 2025). tenable.com

Mímir Security Blog – “Sharding to Contain the Blast Radius of Data Breaches.” (Dec 5, 2025). mimirsec.com

Mímir Security Blog – “The Hidden Risk in Cloud Databases: When a Vendor Breach Becomes Your Breach.” (Oct 30, 2025). mimirsec.com

Mímir Security Blog – BrunnrDB Architecture Details (Client-Side Encryption & Shard on User Access model). mimirsec.com

Mímir Security Blog – BrunnrDB Lateral Movement Prevention & Layered Security. mimirsec.com

Mímir Security Blog – Compliance and Data Localization Benefits of Sharding. mimirsec.com
